This site is also available in: Deutsch (German)

Migration of an MS Access application to Angular/Spring Boot: A field report

In the rapidly evolving world of corporate IT, many companies are faced with the challenge of modernizing outdated systems. In recent months, we have been in contact with several companies that are facing the challenge of replacing MS Access applications in a timely manner and making them “cloud ready”. We recently undertook our first project of this kind and replaced a long-standing MS Access application with a modern web application using Angular and Spring Boot. This article highlights the technical aspects and challenges of this project.

Initial situation and motives

The existing MS Access solution had served well for years, but had reached its limits:

- Concentration of expertise on a small number of employees

- Foreseeable end-of-life of MS Access

- Limited scalability with increasing number of users

- New requirements for cross-company or cross-cantonal data sharing

Technical implementation

Before we could devote ourselves to the design of the architecture, a number of questions had to be clarified with regard to the framework conditions and, above all, non-functional requirements. The purely functional requirements, on the other hand, were quite obvious: it should (in the first step) offer the same range of functions. Just a little nicer and perhaps a little faster.

The framework conditions were quickly set. The customer relied on a Kubernetes infrastructure, had an executable CI/CD environment based on Gitlab and used Helm for templating (and release management). The new application should fit seamlessly into the setup. Databases (not MS Access) were also provided promptly using an internal ordering process.

As the company maintains an internal component library based on Angular, the choice of frontend framework was also obvious. Other departments in the same company were increasingly relying on Spring Boot backends, which essentially left no questions unanswered.

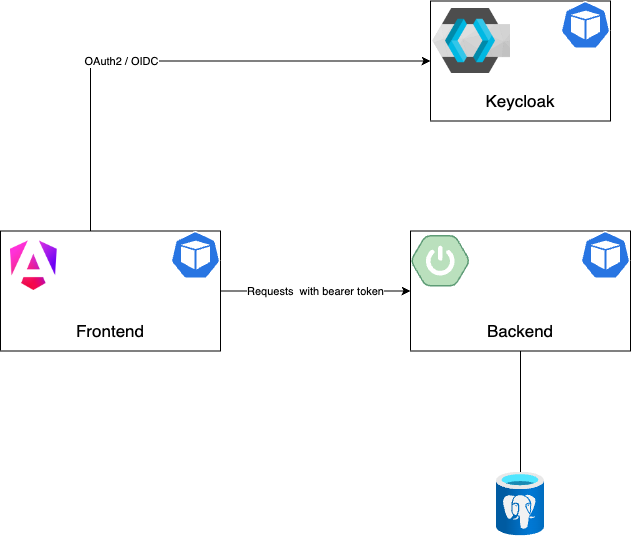

System architecture

The system architecture is relatively simple. An Angular-based frontend is delivered to the client via an ngix Kubernetes pod. Keycloak is used for authentication (OAuth2 with Open ID Connect). The data is compiled from the database via the Sprint Boot backend and transferred to the client.

Keycloak as well as the Angular frontend and Spring Boot backend run in their own pod. The database was provided by the customer’s storage team – outside the cluster.

Frontend (Angular)

- Implementation of a Single Page Application (SPA)

- Use of internal company design components for a consistent UI

- Keycloak connection via OAuth2/OIDC

- State management with NgRx for effective management of the application state

Backend (Spring Boot)

- Development of RESTful APIs for communication with the frontend

- Implementation of the business logic – modularized according to determined context elements

- Use of Spring Security (JWT token verification, mapping of claims to authorities, protection of REST endpoints)

- Integration of Hibernate ORM for efficient database management

Database

- Migration of tables and data from MS Access to PostgreSQL

- Implementation of database indexes and optimization of queries

Deployment

- Containerization of the application with Docker

- Orchestration with Kubernetes for improved scalability and manageability

- Implementation of a CI/CD pipeline with Jenkins for automated tests and deployments

Challenges and solutions

Data migration

Over the years, a considerable number of data records have accumulated, which had to be transferred to the new database structure. In addition, there were some tables that still contained data records but were no longer actually used. It was reasonable to assume that these were tables from previous releases, which most likely once contributed to the business logic. Such tables had to be identified and omitted when importing them into the new structure.

To make matters worse, some tables used natural keys and a conversion would cause problems for other systems. This had to be taken into account when importing this data (although the new database schema consistently used surrogate keys to avoid having to go through this challenge again).

Ultimately, a straightforward ETL tool was implemented, which extracted the data step by step from the existing MS Access database, converted (transformed) it into the new structure and loaded it into the new database. Finally, it performed some validation tasks to verify a correct transfer.

“Hidden business logic”

The business logic of the forms was primarily mapped in the MS Access solution using macros and Visual Basic code. Some of this logic was much more fragmented than originally expected. Cohesion was low, which meant that understanding of the implemented business logic could only be built up step by step.

This challenge was already recognized and addressed in the analysis. Meanwhile, ideas from Domain Driven Design were used. Bounded context(s) could be derived, entities placed and ultimately linked to the “code snippets” (which contained the business logic). The procedure probably does not quite correspond to the idea of Eric Evans (author of the DDD classic “Domain Driven Design”), but has proven to be a practicable procedure.

User acceptance

One admittedly unexpected challenge – at least at the beginning – was user acceptance. This can probably be explained with an analogy: Anyone who has ever observed a checkout system employee is familiar with this phenomenon. An incredibly large and seemingly complex form appears on the screen, but the employee manages to navigate through the form and complete the process within 1-2 seconds using key combinations and intensive use of the tab key. If this form is now transferred to a new application, the burnt-in patterns no longer work. A similar challenge was identified during the first user tests on the new solution. Design elements that we considered clever and well-placed met with resistance. Placement of elements seemed logical to us, but was annoying for users. And very important: the tab order of the elements(!).

In the end, the feedback led to a number of changes to the new forms, which were ultimately accepted favorably.

Lessons Learned

In retrospect, which aspects contributed significantly to the success of the project?

- Thorough analysis: A detailed examination of the existing application, especially the hidden business logic, is essential.

- Include user feedback: Involving end users early on can uncover unexpected user adoption challenges.

- Flexibility in implementation: The willingness to adapt designs and functions based on user feedback is crucial for success.

- Plan data migration: The complexity of data migration should not be underestimated. A solid ETL process with validation steps is important.

- Solid software/architecture design: You should always bear in mind that everything we write today will have to be maintained at some point. Cohesion-free code snippets as in the original solution make this significantly more difficult.

- Continuous training: The development team should be trained in both modern and older technologies in order to modernize legacy systems effectively.

Conclusion

The migration from MS Access to a modern web application was a complex but rewarding undertaking. The new architecture offers improved scalability, maintainability and future-proofing. At the same time, the project presented the development team with new challenges and provided an opportunity for further training in modern and old technologies.

As always, the devil is in the detail. This was particularly true of the scattered business logic, which had to be collected and categorized in advance. For companies facing a similar task, thorough planning and step-by-step implementation are recommended. This sounds like “business as usual”, but against the background that an existing application “only” needs to be ported to another technology, this is often underestimated by outsiders. People are reluctant to port legacy applications. In my experience, it is never a pure porting process. There are still the steps: Analyze what’s there, consider what should be kept, clean up what’s there, make sure you understand it, port it and test the result (compare it to the original solution).

Investing in modern technologies can lead to significant long-term benefits in terms of efficiency and competitiveness.

PS: There is also a variant with Power Automate. Here is a field report on the migration of an MS Access application to Power Automate.